fatf.transparency.predictions.surrogate_explainers.TabularBlimeyTree


class fatf.transparency.predictions.surrogate_explainers.TabularBlimeyTree(dataset: numpy.ndarray, predictive_model: object, as_probabilistic: bool = True, as_regressor: bool = False, categorical_indices: Optional[List[Union[str, int]]] = None, class_names: Optional[List[str]] = None, classes_number: Optional[int] = None, feature_names: Optional[List[str]] = None, unique_predictions: Optional[List[Union[str, int]]] = None)

A surrogate explainer based on a decision tree.

Changed in version 0.1.0: Added support for regression models.

New in version 0.0.2.

This explainer does not use an interpretable data representation (one is learnt by the tree). The data augmentation is done with Mixup (fatf.utils.data.augmentation.Mixup) around the data point specified in the explain_instance method. No data weighting procedure is used when fitting the local surrogate model.

When as_regressor is set to True, a surrogate regression tree is fitted to a black-box regressor. When it is set to False, predictive_model is treated as a classifier. When the underlying predictive model is probabilistic (as_probabilistic=True), the local decision tree is trained as a regressor of the probabilities output by the black-box predictive_model. When the predictive_model is a non-probabilistic classifier, the local decision tree is a classifier that is either fitted as one-vs-rest for a selected class or mimics the classification problem by fitting a multi-class classifier.

The explanation output by the explain_instance method is a simple feature importance measure extracted from the local tree. Alternatively, the local tree model can be returned for further processing or visualising.

Since this explainer is based on scikit-learn's implementation of decision trees, it supports neither structured arrays nor categorical (text-based) features.
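The Mixup sampling step described above can be sketched in a few lines of NumPy. This is a minimal illustration of Mixup-style sampling, not fatf's Mixup implementation: the helper name mixup_sample is hypothetical, and the Beta(2, 5) mixing distribution is an assumption chosen so that the samples stay closer to the explained point than to the randomly drawn dataset rows.

```python
import numpy as np


def mixup_sample(dataset, data_row, samples_number=50,
                 beta_parameters=(2.0, 5.0), seed=None):
    """Mixup-style sampling around ``data_row`` (hypothetical helper).

    Each sample is a convex combination of ``data_row`` and a randomly drawn
    row of ``dataset``, so it lies on the segment between the two points.
    """
    rng = np.random.default_rng(seed)
    dataset = np.asarray(dataset, dtype=float)
    data_row = np.asarray(data_row, dtype=float)
    # Draw "partner" rows from the dataset uniformly, with replacement.
    partners = dataset[rng.integers(0, dataset.shape[0], size=samples_number)]
    # One mixing weight per sample, shaped (samples_number, 1) so that it
    # broadcasts across all of the features of its row.
    weights = rng.beta(*beta_parameters, size=(samples_number, 1))
    # Beta(2, 5) has mean 2/7, so samples tend to stay closer to data_row.
    return weights * partners + (1.0 - weights) * data_row


dataset = np.array([[0.0, 0.0], [1.0, 2.0], [4.0, 1.0]])
samples = mixup_sample(dataset, np.array([2.0, 1.0]), samples_number=50, seed=0)
print(samples.shape)  # (50, 2)
```

In the explainer, the black-box model would then be queried on such samples and the surrogate tree fitted to its outputs without any sample weights.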

For additional parameters, warnings and errors please see the documentation of the parent class: fatf.transparency.predictions.surrogate_explainers.SurrogateTabularExplainer.

- Attributes

- augmenter : fatf.utils.data.augmentation.Augmentation

The augmenter class (fatf.utils.data.augmentation.Mixup) used for local data sampling.

- Raises

- ImportError

The scikit-learn package is missing.

- TypeError

The dataset parameter is a structured array or the dataset contains non-numerical features.
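Both TypeError conditions can be detected with NumPy's dtype introspection. The sketch below is a hypothetical helper (check_tree_compatible is not part of fatf) that mirrors the documented conditions; fatf's own validation may differ in detail, for example in how it treats boolean arrays.

```python
import numpy as np


def check_tree_compatible(dataset):
    """Raise TypeError for inputs a scikit-learn tree surrogate cannot use.

    Hypothetical helper mirroring the two documented TypeError conditions.
    """
    dataset = np.asarray(dataset)
    # Structured arrays have named fields, exposed through dtype.names.
    if dataset.dtype.names is not None:
        raise TypeError('The dataset must not be a structured array.')
    # Arrays holding text (string dtype) or arbitrary objects (object dtype)
    # are not sub-types of np.number and therefore fail this check.
    if not np.issubdtype(dataset.dtype, np.number):
        raise TypeError('The dataset must contain only numerical features.')


check_tree_compatible(np.array([[0.0, 1.5], [2.0, 3.0]]))  # passes silently
```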

Methods

explain_instance(data_row, numpy.void], …): Explains the data_row with decision tree feature importance.
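Decision tree feature importance is commonly computed as the normalised mean decrease in impurity (MDI), which is what scikit-learn exposes as a fitted tree's feature_importances_ attribute. Assuming the explanations are derived this way, the arithmetic can be made explicit on a small hand-built tree; the Node class and the mdi_importance function are hypothetical and only illustrate the computation.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Node:
    impurity: float                # e.g. variance for a regression tree
    n_samples: int                 # training samples reaching this node
    feature: Optional[str] = None  # None marks a leaf
    left: Optional[int] = None     # index of the left child in the node list
    right: Optional[int] = None    # index of the right child in the node list


def mdi_importance(nodes: List[Node], feature_names: List[str]) -> Dict[str, float]:
    """Normalised mean-decrease-in-impurity importance; nodes[0] is the root."""
    total = nodes[0].n_samples
    raw = {name: 0.0 for name in feature_names}
    for node in nodes:
        if node.feature is None:
            continue
        left, right = nodes[node.left], nodes[node.right]
        # Impurity decrease of this split, weighted by the share of samples.
        raw[node.feature] += (node.n_samples * node.impurity
                              - left.n_samples * left.impurity
                              - right.n_samples * right.impurity) / total
    norm = sum(raw.values())
    if norm == 0:
        return raw  # a single-leaf tree has no splits, hence no importance
    return {name: value / norm for name, value in raw.items()}


nodes = [
    Node(impurity=0.5, n_samples=100, feature='x0', left=1, right=2),
    Node(impurity=0.4, n_samples=60, feature='x1', left=3, right=4),
    Node(impurity=0.0, n_samples=40),
    Node(impurity=0.0, n_samples=30),
    Node(impurity=0.0, n_samples=30),
]
importances = mdi_importance(nodes, ['x0', 'x1', 'x2'])
# importances ≈ x0: 0.52, x1: 0.48, x2: 0.0
```

Features never used in a split (x2 here) receive zero importance, which is one reason a shallow local tree yields a compact explanation.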

explain_instance(data_row: Union[numpy.ndarray, numpy.void], explained_class: Union[int, str, None] = None, one_vs_rest: bool = True, samples_number: int = 50, maximum_depth: int = 3, return_models: bool = False) → Union[Dict[str, Dict[str, float]], Tuple[Dict[str, Dict[str, float]], Union[Dict[str, fatf.utils.models.models.Model], fatf.utils.models.models.Model]]]

Explains the data_row with decision tree feature importance.

If the black-box model is a classifier, the explanations will be produced for all of the classes by default. This behaviour can be changed by selecting a specific class with the explained_class parameter. For black-box classifiers, the local tree is learnt as a one-vs-rest model by default (for one class at a time, or only for the selected class). This is a requirement for probabilistic black-box models, as the local model has to be a regression (tree) of the probabilities of a selected class. However, when the black-box model is a non-probabilistic classifier, the local tree can be learnt either as one-vs-rest or as multi-class (chosen by setting the one_vs_rest parameter). The depth of the local tree can also be limited to improve its comprehensibility by setting the maximum_depth parameter.

The data sampling around the data_row can be customised by specifying the number of points to be generated (samples_number). By default, this method only returns feature importance; however, by setting return_models to True, it will also return the local tree surrogates for further analysis and processing outside of this method.

For additional parameters, warnings and errors please see the parent class method fatf.transparency.predictions.surrogate_explainers.SurrogateTabularExplainer.explain_instance.

- Parameters

- data_row : Union[numpy.ndarray, numpy.void]

A data point to be explained (1-dimensional numpy array).

- explained_class : Union[integer, string], optional (default=None)

The class to be explained. This parameter is ignored when the black-box model is a regressor. If None, all of the classes will be explained. For probabilistic (black-box) models this can be either the index of the class (the column index of the probabilistic vector) or the class name (taken from self.class_names). For non-probabilistic (black-box) models this can be the name of the class (taken from self.class_names), the prediction value (taken from self.unique_predictions) or the index of either of these two (assuming the lexicographical ordering of the unique predictions output by the model).

- one_vs_rest : boolean, optional (default=True)

A boolean indicating whether the local model should be fitted as one-vs-rest (required for probabilistic models) or as a multi-class classifier. This parameter is ignored when the black-box model is a regressor.

- samples_number : integer, optional (default=50)

The number of data points sampled from the Mixup augmenter, which will be used to fit the local surrogate model.

- maximum_depth : integer, optional (default=3)

The maximum depth of the local decision tree surrogate model. The lower the number, the smaller the decision tree, making it (and the resulting explanations) more comprehensible.

- return_models : boolean, optional (default=False)

If True, this method will return both the feature importance explanation dictionary and a dictionary holding the local models. Otherwise, only the first dictionary will be returned.

- Returns

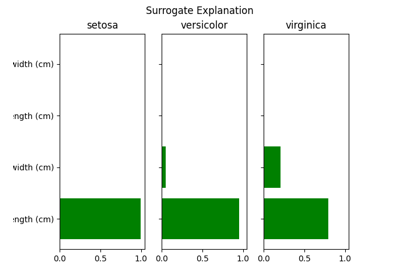

- explanations : Dictionary[string, Dictionary[string, float]]

A dictionary holding dictionaries that contain feature importance, where the feature names are taken from self.feature_names and the feature importances are extracted from local surrogate trees. These dictionaries are held under keys corresponding to class names (taken from self.class_names).

- models : sklearn.tree.tree.BaseDecisionTree, optional

A dictionary holding locally fitted surrogate decision tree models held under class name keys (taken from self.class_names). This dictionary is only returned when the return_models parameter is set to True.

- Raises

- RuntimeError

A surrogate cannot be fitted because the (black-box) predictions for the sampled data are of a single class, or do not include the requested class in the case of the one-vs-rest local model. This only applies to black-box models that are non-probabilistic classifiers (self.as_probabilistic=False and self.as_regressor=False).

- TypeError

The explained_class parameter is neither None, a string nor an integer. The one_vs_rest parameter is not a boolean. The samples_number parameter is not an integer. The maximum_depth parameter is not an integer. The return_models parameter is not a boolean.

- ValueError

The samples_number parameter is not a positive integer (larger than 0). The maximum_depth parameter is not a positive integer (larger than 0). The explained_class parameter is not recognised. For probabilistic (black-box) models this means that it could be recognised neither as a class name (self.class_names) nor as an index of a class name. For non-probabilistic (black-box) models this means that it could be recognised neither as one of the possible predictions (self.unique_predictions) or a class name (self.class_names), nor as an index of either of these two.

- Warns

- UserWarning

The one_vs_rest parameter was set to False for an explainer that is based on a probabilistic (black-box) model. This is not possible, hence the one_vs_rest parameter will be overwritten to True.

Choosing a class to be explained (via the explained_class parameter) is not required when requesting a multi-class local classifier (one_vs_rest=False) for a non-probabilistic black-box model, since all of the classes will share a single surrogate.
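The interplay between one_vs_rest, explained_class and the RuntimeError conditions can be sketched as the construction of the targets that local trees are fitted to. This is a hypothetical helper, not fatf's code: it follows the requirement stated in the method description that probabilistic black-box models use one-vs-rest regression surrogates, assumes explained_class has already been resolved to a class index, and omits black-box regressors.

```python
import warnings
from typing import Dict, List, Optional, Union

import numpy as np


def local_targets(
    black_box_output: np.ndarray,
    class_names: List[str],
    as_probabilistic: bool,
    one_vs_rest: bool = True,
    explained_class: Optional[int] = None,
) -> Union[Dict[str, np.ndarray], np.ndarray]:
    """Build the target(s) that local surrogate trees would be fitted to.

    Hypothetical helper. ``black_box_output`` holds the black-box predictions
    for the sampled data: a probability matrix (n_samples x n_classes) when
    ``as_probabilistic`` is True, otherwise a vector of class indices.
    """
    if as_probabilistic and not one_vs_rest:
        # Probabilities can only be mimicked by one regression tree per class.
        warnings.warn('one_vs_rest is required for probabilistic models; '
                      'overwriting it to True.', UserWarning)
        one_vs_rest = True
    if not one_vs_rest and explained_class is not None:
        warnings.warn('A multi-class surrogate covers all classes; '
                      'explained_class is not needed.', UserWarning)

    if not one_vs_rest:
        # Non-probabilistic, multi-class: one classifier mimics all labels.
        if np.unique(black_box_output).size < 2:
            raise RuntimeError('The sampled data are all of a single class.')
        return black_box_output

    indices = (range(len(class_names)) if explained_class is None
               else [explained_class])
    targets = {}
    for index in indices:
        if as_probabilistic:
            # Regression target: the black-box probability of this class.
            targets[class_names[index]] = black_box_output[:, index]
        else:
            # Classification target: 1 for this class, 0 for the rest.
            binary = (black_box_output == index).astype(int)
            if binary.min() == binary.max():
                raise RuntimeError(
                    f'Cannot fit a one-vs-rest surrogate for class '
                    f'{class_names[index]!r}: only one side is present.')
            targets[class_names[index]] = binary
    return targets
```

Returning a single array for the multi-class case loosely mirrors the documented return type, where the models are either a dictionary keyed by class name or a single model.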